How to Install and Use Llama-Stack for Your AI Development Journey 🚀

Saturday, Dec 21, 2024 | 6 minute read

Unlock the future of AI development! 🌟 This innovative open-source framework streamlines building generative AI applications across platforms, featuring a flexible API, plug-and-play solutions, and comprehensive documentation. Experience unmatched efficiency and creativity! 🚀💡

In the realm of generative AI, tool selection can greatly influence development efficiency and product innovation.

In today’s rapidly evolving AI landscape, Llama-Stack brings developers an exciting tool! ✨ It’s an open-source project that provides a range of core building blocks for developing generative AI applications smoothly and efficiently. Llama-Stack supports multiple platforms and aims to simplify complex technical processes so that developers can truly focus on innovation and product development! 🌟

🛠️ Llama-Stack: Seamless Multi-Platform Support

Llama-Stack offers a unified and flexible development experience, allowing users to move seamlessly between desktop, mobile, and other environments. 🎉 Developers can choose a local or cloud environment based on their needs, which reduces the learning curve across platforms. The flexible API design lets developers adapt quickly to changing requirements and simplifies deployment, so they can focus on innovation and feature development for their products! 💼💡

🌈 Unique Features of Llama-Stack: Breaking the Norms to Redefine Development Experience

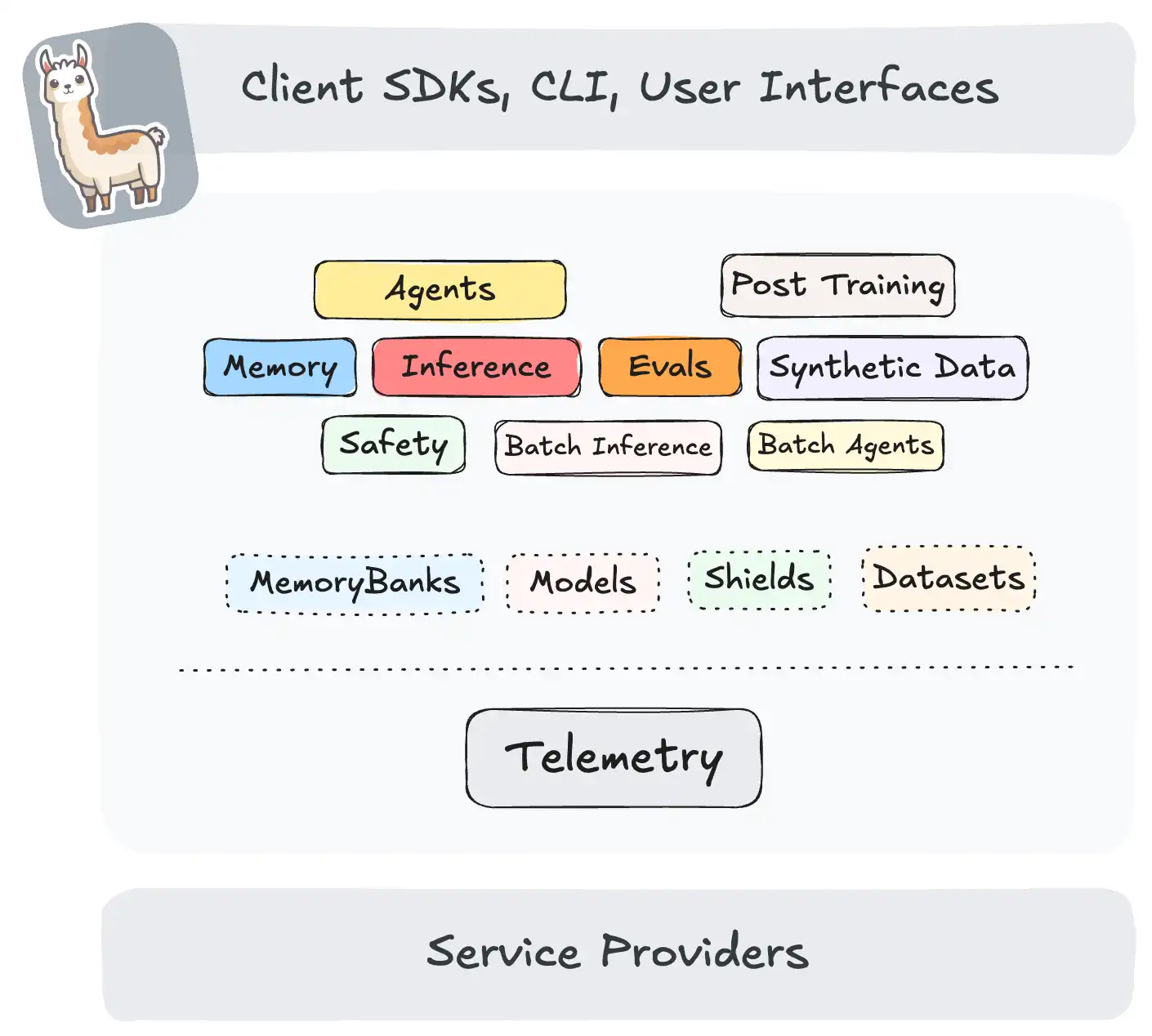

Llama-Stack stands out among AI development frameworks thanks to several unique features. First, the thoughtfully designed REST API makes integration straightforward, so developers no longer have to worry about complex underlying implementation details! ✨ Its modular architecture lets you get started quickly and significantly lowers the learning curve.

Even more exciting, the plug-and-play solutions offered by Llama-Stack provide ready-to-use deployments for popular scenarios, ensuring developers can swiftly complete their deployment tasks. 🔄 Moreover, its efficient inference, scalable storage, and monitoring solutions, especially focused on supporting Llama models, truly ensure that developers enjoy an exceptional user experience and high development efficiency! ⚡️

📚 Why Choose Llama-Stack: The Source of Developer Confidence

Llama-Stack has become a top choice for developers with its rich API options! 🔍 First, developers can select appropriate operations according to their needs, greatly enhancing flexibility. Additionally, comprehensive documentation such as command-line references and quick start guides allows both beginners and seasoned developers to quickly hit the ground running. 📖

And that’s not all! Llama-Stack offers various deployment options, allowing developers to flexibly choose suitable operating environments, further enhancing project adaptability. The focus on mainstream Llama model architecture enables developers to fully tap into the potential of this excellent model, driving rapid enhancements and iterations in AI applications! 🔥 With all these factors combined, Llama-Stack undoubtedly represents a source of confidence for developers. 🏆

Llama-Stack is unquestionably the best partner for your next AI development journey, so let’s embark on this exciting adventure together! 🌍💖

🌟 How to Use Llama-Stack: A Complete Guide from Installation to Configuration

Install Llama-Stack ☁️

To get started with Llama-Stack, we first need to install it! The simplest route is pip: enter the following in the terminal:

pip install llama-stack

💡 Note: This command will download and install the latest version of Llama-Stack, including all necessary dependencies 📦. Ensure your Python environment is set up, and pip is updated to the latest version to avoid any installation errors.
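Before running the install, it can help to confirm that the interpreter is recent enough. The following is a minimal sketch, not an official check: the helper names `version_ge` and `check_python` are hypothetical, and the 3.10 minimum is an assumption borrowed from the conda step later in this guide.

```shell
# Sketch: confirm python3 is new enough before `pip install llama-stack`.
# Assumption: 3.10 is used as the minimum because this guide's conda step pins it.

# version_ge A B: succeeds when dotted version A is >= B.
version_ge() {
    # sort -V orders version strings; if B sorts first (or ties), then A >= B
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# check_python [MIN]: report whether python3 meets the minimum version.
check_python() {
    local min="${1:-3.10}" found
    found="$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])' 2>/dev/null)" || {
        echo "python3 not found"
        return 1
    }
    if version_ge "$found" "$min"; then
        echo "Python $found is OK (>= $min)"
    else
        echo "Python $found is older than $min"
        return 1
    fi
}
```

Calling `check_python` (or `check_python 3.11` for a stricter minimum) prints a one-line verdict and returns a non-zero status when the interpreter is missing or too old.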

If you prefer to install from source, make sure you have conda installed, and then follow these steps:

# Create a folder named local in your home directory

mkdir -p ~/local

cd ~/local

📂 Comment: The command mkdir -p ~/local creates a folder named local in your home directory to store the project; the -p flag means the command also succeeds if the folder already exists. The cd ~/local command then navigates to this folder.

# Clone the Llama-Stack project to your local machine

git clone git@github.com:meta-llama/llama-stack.git

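🛠️ Note: This command uses git to clone the source code of Llama-Stack to your local machine, allowing you to modify or view the source code as needed. Because the URL uses the SSH form (git@github.com:...), it requires an SSH key registered with your GitHub account.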
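If no SSH key is set up, the same repository can usually be cloned over HTTPS, the form this guide uses later for llama-stack-apps. As a small sketch, the hypothetical helper `to_https` below rewrites an SSH-style GitHub URL into its HTTPS equivalent; it assumes the standard `git@host:owner/repo.git` layout.

```shell
# to_https URL: rewrite git@host:owner/repo.git as https://host/owner/repo.git.
# URLs that are not in the SSH form pass through unchanged.
to_https() {
    printf '%s\n' "$1" | sed -E 's#^git@([^:]+):#https://\1/#'
}

# Example usage (performs a real clone, so it is left commented out):
# git clone "$(to_https git@github.com:meta-llama/llama-stack.git)"
```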

# Create a conda environment named stack and specify Python version 3.10

conda create -n stack python=3.10

conda activate stack

✨ Comment: This command creates a new conda environment named stack, specifying Python 3.10 as the version. The subsequent conda activate stack command activates this environment, making it the one you are currently using.
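A common mistake is to run the later install commands in the wrong environment. The sketch below shows one way to guard against that. It relies on the `CONDA_DEFAULT_ENV` variable, which conda sets when an environment is activated; the function name `require_env` is hypothetical.

```shell
# require_env NAME: succeed only when the active conda environment is NAME.
require_env() {
    local want="$1"
    if [ "${CONDA_DEFAULT_ENV:-}" = "$want" ]; then
        echo "conda environment '$want' is active"
    else
        echo "expected conda environment '$want', found '${CONDA_DEFAULT_ENV:-none}'" >&2
        return 1
    fi
}
```

For example, `require_env stack && echo "safe to install"` only prints the second message when the stack environment is the active one.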

# Enter the llama-stack project directory

cd llama-stack

📁 Note: This command navigates you to the Llama-Stack project folder that you just cloned, enabling you to proceed to the next steps.

# Install the dependencies from the project

$CONDA_PREFIX/bin/pip install -e .

⚙️ Comment: This command uses the active conda environment’s pip to install the Llama-Stack project itself, together with its dependencies, to ensure that everything runs smoothly. The -e flag indicates an editable installation, meaning any modifications to the source code take effect without reinstalling.
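To confirm that the installation registered correctly, you can ask Python for the installed version of the distribution. This is a hedged sketch: `pkg_version` is a hypothetical helper, and it assumes the distribution is named llama-stack, matching the pip command earlier in this guide.

```shell
# pkg_version NAME: print the installed version of a distribution.
# Returns a non-zero status when the distribution (or python3) is missing.
pkg_version() {
    python3 -c 'import sys; from importlib.metadata import version; print(version(sys.argv[1]))' "$1" 2>/dev/null
}

# Report the result without aborting the shell session either way.
if v="$(pkg_version llama-stack)"; then
    echo "llama-stack $v is installed"
else
    echo "llama-stack is not installed in this environment"
fi
```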

If you haven’t installed Miniconda yet, do so before creating the conda environment above. On macOS you can install it using Homebrew:

brew install miniconda

🍺 Note: Homebrew is a package manager for macOS. Make sure it is installed before running this command, which installs Miniconda for environment management.
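Since conda may already be present, a quick check avoids an unnecessary install. The sketch below only inspects the PATH and prints a suggestion; it installs nothing. The helper name `have_cmd` is hypothetical.

```shell
# have_cmd NAME: succeed when NAME resolves to a command on the PATH.
have_cmd() { command -v "$1" >/dev/null 2>&1; }

if have_cmd conda; then
    echo "conda already installed: $(conda --version)"
elif have_cmd brew; then
    echo "conda missing; run: brew install miniconda"
else
    echo "conda and Homebrew are both missing; install Homebrew first"
fi
```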

Setup Steps ⚙️

Once you have completed the basic installation of Llama-Stack, we need to perform some additional configurations to ensure that our environment is correctly set up and operational. Here are the detailed steps:

# Clone the llama-stack-apps repository to your local machine

git clone https://github.com/meta-llama/llama-stack-apps.git

📦 Comment: This command clones a second repository, llama-stack-apps, to your local machine. It is part of the project and contains sample applications.

# Create a conda environment named llama-stack

conda create -n llama-stack python=3.10

🔖 Note: Similar to the previous command, we’re creating another conda environment named llama-stack. This helps keep different projects separate for easier management.

# Activate the conda environment

conda activate llama-stack

✅ Comment: With this command, we activate the llama-stack environment to ensure that all subsequent installations and executions utilize the packages and settings from this environment.

# Enter the llama-stack-apps directory

cd llama-stack-apps

📂 Note: Switch to the just-cloned llama-stack-apps folder, where we will perform additional dependency installations.

# Install required modules based on the requirements.txt file

pip install -r requirements.txt

📝 Comment: The -r parameter reads the dependencies listed in the requirements.txt file, automatically installing all the necessary libraries. This is an important step to ensure your environment is configured to meet the project’s needs.
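The setup steps above can be collected into one script. The following is a sketch under stated assumptions rather than an official installer: `run`, `setup_apps`, and the `DRY_RUN` variable are hypothetical names, the `-y` flag is added so conda does not prompt, and `conda run -n llama-stack` is swapped in for `conda activate` because activation inside a non-interactive script typically needs extra shell initialization.

```shell
# run CMD...: print the command, then execute it unless DRY_RUN=1.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-0}" = "1" ] || "$@"
}

# setup_apps: the clone / create / cd / install sequence, stopping at the first failure.
setup_apps() {
    run git clone https://github.com/meta-llama/llama-stack-apps.git &&
    run conda create -y -n llama-stack python=3.10 &&
    run cd llama-stack-apps &&
    run conda run -n llama-stack pip install -r requirements.txt
}

# Preview the commands without executing anything:
#   DRY_RUN=1 setup_apps
# Execute them for real:
#   setup_apps
```

Previewing with `DRY_RUN=1` first is a cheap way to confirm the sequence before it touches the network or your conda installation.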

Using Ollama 🦙

With the basic setup of Llama-Stack complete, we also need to install and configure Ollama. This tool runs models locally and will help users interact with earlier, smaller LLMs.
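Once Ollama is installed, it is worth checking that its server is reachable before wiring it into the stack. The sketch below assumes Ollama is listening at its default local address, http://localhost:11434; adjust the URL if your setup differs. The function name `ollama_up` is hypothetical.

```shell
# ollama_up [URL]: succeed when an HTTP server answers successfully at URL.
# Assumption: the default URL is Ollama's usual local address.
ollama_up() {
    local url="${1:-http://localhost:11434}"
    curl --silent --fail --max-time 3 --output /dev/null "$url"
}

if ollama_up; then
    echo "Ollama server is reachable"
else
    echo "Ollama server is not reachable; try starting it with: ollama serve"
fi
```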

Configuring Your Stack ⚙️

Once the installation and setup are complete, the next step is to configure the stack. By pairing the inference provider with the Ollama server, we ensure that the models can run smoothly. Here is the configuration code:

# Enter your configuration commands here

🛠️ Note: The specific configuration commands for this step depend on your installation, so you will need to enter the commands and configuration values that match your setup. Be sure to configure all parameters correctly to ensure the system works properly.

Now go and experience the fun of Llama-Stack, creating amazing AI applications! 🚀🌈