How to Install and Use LocalAI: Unlocking a New Era of AI Technology 🚀

Saturday, Jan 11, 2025 | 5 minute read

Self-hosted AI revolutionizes accessibility! 🌍 This open-source project lets you run diverse AI tasks such as text, image, and audio processing on your own machine, without high-end hardware. Experience easy deployment, community support, and innovative features for every AI enthusiast! 🚀✨

Unlocking a New Era of AI Technology: A Fascinating Introduction to LocalAI 🔍

In today’s world, where we increasingly rely on artificial intelligence (AI) technology, more and more people are eager to take control and innovate. With the rapid development of AI applications, many have found mainstream services often lack flexibility and fail to meet their diverse needs. This is where an emerging solution comes in—LocalAI, which opens up a whole new realm of possibilities for exploring AI! ✨

LocalAI is an exciting open-source project that offers a powerful self-hosted alternative to OpenAI: its REST API is compatible with the OpenAI API specification, so tools built for OpenAI can talk to your own server instead. It shines because it runs a wide range of AI tasks locally on consumer-grade hardware, with no GPU required. This makes cutting-edge AI far more accessible to everyone! 🖥️
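Because the API follows the OpenAI format, switching an existing request to LocalAI is mostly a matter of changing the base URL. The minimal sketch below assumes LocalAI is listening on `localhost:8080` (the port used in the Docker commands later in this post). `OPENAI_COMPAT_BASE`, `json_escape`, `chat_body`, and `chat` are hypothetical names chosen for illustration, and the model name you pass must be one your server actually has.

```shell
# Minimal sketch: one OpenAI-style chat request, sent to whichever server
# OPENAI_COMPAT_BASE points at (hypothetical variable; defaults to LocalAI).
OPENAI_COMPAT_BASE="${OPENAI_COMPAT_BASE:-http://localhost:8080/v1}"

# Escape backslashes and double quotes so text fits in a JSON string.
# Single-line input only: newlines and control characters are not handled.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Build the JSON body for POST /chat/completions with one user message.
chat_body() {  # usage: chat_body MODEL "PROMPT"
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the request and print the JSON response. The Authorization header is
# only added when OPENAI_API_KEY is set (needed for OpenAI, optional locally).
chat() {  # usage: chat MODEL "PROMPT"
  curl -sS "$OPENAI_COMPAT_BASE/chat/completions" \
    -H "Content-Type: application/json" \
    ${OPENAI_API_KEY:+-H "Authorization: Bearer $OPENAI_API_KEY"} \
    -d "$(chat_body "$1" "$2")"
}
```

For example, `chat llama-3.2-1b-instruct:q4_k_m 'Hello!'` would send one user message, assuming a model is installed under that name. Setting `OPENAI_COMPAT_BASE=https://api.openai.com/v1` plus `OPENAI_API_KEY` would send the same body to OpenAI instead.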

LocalAI’s versatility supports numerous types of AI tasks, including 📄 text generation, 🖼️ image processing, and 🎵 audio processing. Whether you’re a developer, artist, or simply an AI enthusiast, you’ll find solutions that cater to your unique needs! 🌈

LocalAI’s Superpowers: Unveiling its Unique Features 💡

LocalAI boasts a range of impressive capabilities that make it stand out among AI tools! For text generation, it supports a variety of model families, giving users the power to generate rich, diverse, and personalized text. For audio, LocalAI offers text-to-speech and audio transcription, making it a breeze to turn text into speech and speech into text 🎤.
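To make the two audio features concrete, here is a minimal curl sketch. It assumes a server on `localhost:8080`; `/v1/audio/transcriptions` is the OpenAI-compatible transcription endpoint and `/tts` is LocalAI's text-to-speech endpoint. The helper names (`transcribe`, `tts_body`, `speak`) and the model names you pass are placeholders for whatever you have installed.

```shell
# Sketch of LocalAI's audio endpoints with curl (hypothetical helper names).
LOCALAI_URL="${LOCALAI_URL:-http://localhost:8080}"

# Escape backslashes and double quotes for a single-line JSON string.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Speech-to-text: multipart upload of an audio file; prints the JSON result.
transcribe() {  # usage: transcribe MODEL AUDIO_FILE
  curl -sS "$LOCALAI_URL/v1/audio/transcriptions" \
    -F "model=$1" \
    -F "file=@$2"
}

# JSON request body for text-to-speech.
tts_body() {  # usage: tts_body MODEL "TEXT"
  printf '{"model":"%s","input":"%s"}' "$(json_escape "$1")" "$(json_escape "$2")"
}

# Text-to-speech: the response is audio, so save it to OUT_FILE.
speak() {  # usage: speak MODEL "TEXT" OUT_FILE
  curl -sS "$LOCALAI_URL/tts" \
    -H "Content-Type: application/json" \
    -d "$(tts_body "$1" "$2")" \
    -o "$3"
}
```

For example, `transcribe whisper-1 ./meeting.wav` would print the transcript as JSON and `speak tts-1 'Hello from LocalAI' hello.wav` would save synthesized speech, assuming models with those names exist on your server.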

With the help of ⭐ Stable Diffusion, LocalAI can generate high-quality images, well suited to artistic creation and visual content! LocalAI can also generate embeddings, the numeric vectors that vector databases use to store and retrieve data efficiently 🔄.
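Both features are exposed as OpenAI-compatible endpoints, `/v1/images/generations` and `/v1/embeddings`. The sketch below assumes a server on `localhost:8080`; the helper names and the model names you pass are placeholders, not part of LocalAI itself.

```shell
# Sketch: image generation and embeddings via LocalAI's OpenAI-compatible API.
LOCALAI_URL="${LOCALAI_URL:-http://localhost:8080}"

# Escape backslashes and double quotes for a single-line JSON string.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# POST a JSON body to an API path and print the JSON response.
post_json() {  # usage: post_json PATH BODY
  curl -sS "$LOCALAI_URL$1" -H "Content-Type: application/json" -d "$2"
}

image_body() {  # usage: image_body MODEL "PROMPT" SIZE (e.g. 256x256)
  printf '{"model":"%s","prompt":"%s","size":"%s"}' \
    "$(json_escape "$1")" "$(json_escape "$2")" "$3"
}

embedding_body() {  # usage: embedding_body MODEL "TEXT"
  printf '{"model":"%s","input":"%s"}' "$(json_escape "$1")" "$(json_escape "$2")"
}

# Generate an image from a text prompt.
generate_image() { post_json /v1/images/generations "$(image_body "$@")"; }
# Turn text into a vector you could store in a vector database.
embed() { post_json /v1/embeddings "$(embedding_body "$@")"; }
```

For example, `generate_image stablediffusion 'a lighthouse at dusk' 256x256` or `embed my-embedding-model 'LocalAI runs models locally'`, where both model names are placeholders for models installed on your server.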

Decentralized, peer-to-peer (P2P) inference lets several machines share the work of running models, so you can pool hardware instead of relying on a single computer. Meanwhile, the integrated web interface lets you interact with and manage AI models intuitively from your browser 🌐. It's truly a delightful way to explore AI!

Why Developers Love LocalAI: The Ultimate Reasons to Choose It 🔑

Developers have plenty of reasons to choose LocalAI! First and foremost, installation is straightforward: a single-command install script and Docker support make it accessible even to novice developers. Second, thanks to its active community, LocalAI integrates with many platforms, making it easy to extend its functionality and applications.

Additionally, the project’s capabilities and model support are continually evolving, with regular updates ensuring the platform’s stability and performance 💪! What’s even better is the abundance of documentation and user assistance resources, which help both newcomers and experienced developers navigate the platform easily and promptly address any issues.

Most importantly, LocalAI fosters an open and collaborative AI community atmosphere, encouraging users to participate and share their experiences. With these advantages, LocalAI has become the platform of choice for many developers and AI enthusiasts, thanks to its efficiency, convenience, and robust capabilities—perfect for a wide range of project implementations and optimizations 🚀✨!

A Simple Guide to Installing and Using LocalAI 🖥️

Installing LocalAI via Bash 🖥️

Want to quickly install LocalAI? It couldn’t be easier—simply run the following command:

```shell
curl https://localai.io/install.sh | sh
```

In this command:

- `curl` is a command-line tool for downloading files from servers. Here, it fetches a shell script named `install.sh` from the LocalAI website.
- The script contains all the installation steps, so you don't have to run each one manually. The pipe (`|`) passes the downloaded script directly to `sh`, which executes it.

This step is easy and straightforward, letting us dive right into the world of AI! 🌍
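Piping a script from the internet straight into `sh` runs it sight unseen. If you prefer to read it first, the following sketch downloads the same `install.sh` to a temporary file, opens it in your pager, and runs it only after you confirm. `confirm` and `install_localai_reviewed` are hypothetical helper names.

```shell
# Ask a yes/no question on stderr; succeed only if the answer starts with y/Y.
confirm() {  # usage: confirm "QUESTION"
  printf '%s [y/N] ' "$1" >&2
  read -r answer || return 1
  case "$answer" in
    [Yy]*) return 0 ;;
    *) return 1 ;;
  esac
}

# Download the installer, let the user read it, then run it if confirmed.
install_localai_reviewed() {
  installer=$(mktemp) || return 1
  # -f: fail on HTTP errors instead of saving an error page; -L: follow redirects.
  if ! curl -fsSL https://localai.io/install.sh -o "$installer"; then
    rm -f "$installer"
    return 1
  fi
  ${PAGER:-less} "$installer"
  if confirm "Run the LocalAI installer now?"; then
    sh "$installer"
    status=$?
  else
    echo "Installation cancelled." >&2
    status=1
  fi
  rm -f "$installer"
  return "$status"
}
```

Calling `install_localai_reviewed` performs the same installation as the one-liner, with a chance to back out after reading the script.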

Starting LocalAI Using Docker 🐳

LocalAI offers several different Docker images, and here are some common start commands:

```shell
# CPU only image:
docker run -ti --name local-ai -p 8080:8080 localai/localai:latest-cpu
```

- `docker run` creates and starts a new container.
- `-ti` allocates an interactive terminal, so you can interact with the container directly.
- `--name local-ai` gives the container a name, making it easier to manage later on.
- `-p 8080:8080` maps port 8080 in the container to port 8080 on the host, so you can reach LocalAI through that port on the host.
- `localai/localai:latest-cpu` is the Docker image to use, perfect for CPU-only environments!
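Because the container has a fixed name, later commands can refer to it as `local-ai`. The sketch below wraps a few standard Docker commands for that, including a detached (`-d`) variant of the `docker run` command above. The function names and the `DOCKER`, `LOCALAI_CONTAINER`, and `LOCALAI_IMAGE` variables are hypothetical; setting `DOCKER=echo` prints the commands instead of running them.

```shell
# Hypothetical wrappers around standard Docker commands for the named container.
# Set DOCKER=echo to print the commands instead of running them.
DOCKER="${DOCKER:-docker}"
NAME="${LOCALAI_CONTAINER:-local-ai}"
IMAGE="${LOCALAI_IMAGE:-localai/localai:latest-cpu}"

# Like the command above, but detached (-d) instead of interactive (-ti).
localai_up()     { "$DOCKER" run -d --name "$NAME" -p 8080:8080 "$IMAGE"; }
# Follow the server log; Ctrl+C stops following, not the container.
localai_logs()   { "$DOCKER" logs -f "$NAME"; }
# Stop the container but keep it, so it can be started again later.
localai_stop()   { "$DOCKER" stop "$NAME"; }
localai_start()  { "$DOCKER" start "$NAME"; }
# Delete the container so the name can be reused by a new `docker run`.
localai_remove() { "$DOCKER" rm -f "$NAME"; }
```

Reusing a name is the main gotcha: a second `docker run --name local-ai` fails while the old container still exists, which is why `localai_remove` is there.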

If you’re using an Nvidia GPU, you can also try the following command:

```shell
# Nvidia GPU:
docker run -ti --name local-ai -p 8080:8080 --gpus all localai/localai:latest-gpu-nvidia-cuda-12
```

- The `--gpus all` flag gives the container access to all available GPUs, so LocalAI can use them for acceleration. This requires the NVIDIA Container Toolkit on the host.

There’s also a larger image option:

```shell
# CPU and GPU image (bigger size):
docker run -ti --name local-ai -p 8080:8080 localai/localai:latest
```

- This image supports both CPU and GPU but has a larger storage footprint, so don’t forget to choose according to your needs!

Additionally, if you want to quickly experience multiple pre-configured models, you can use:

```shell
# AIO images (pre-download a set of models ready for use):
docker run -ti --name local-ai -p 8080:8080 localai/localai:latest-aio-cpu
```

- The AIO (all-in-one) images download a preconfigured set of models, so newcomers can start sending requests without choosing and installing models first! 🚀
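The image choice above boils down to a small lookup. This hypothetical helper maps a hardware profile to the tags listed in this section and prints (but does not run) the corresponding command. `detect_profile` is only a heuristic that looks for `nvidia-smi`; a working GPU setup also needs Docker's GPU support configured.

```shell
# Hypothetical helper: pick a LocalAI image tag for a hardware profile.
localai_image() {  # usage: localai_image cpu|nvidia|all|aio-cpu
  case "$1" in
    cpu)     echo "localai/localai:latest-cpu" ;;
    nvidia)  echo "localai/localai:latest-gpu-nvidia-cuda-12" ;;
    all)     echo "localai/localai:latest" ;;         # CPU and GPU, bigger image
    aio-cpu) echo "localai/localai:latest-aio-cpu" ;; # preconfigured models
    *) echo "unknown profile '$1' (use cpu, nvidia, all or aio-cpu)" >&2
       return 1 ;;
  esac
}

# Heuristic only: assume an NVIDIA setup if nvidia-smi is on the PATH.
detect_profile() {
  if command -v nvidia-smi >/dev/null 2>&1; then echo nvidia; else echo cpu; fi
}

# Print the docker run command for PROFILE (auto-detected if omitted).
localai_run_cmd() {  # usage: localai_run_cmd [PROFILE]
  profile="${1:-$(detect_profile)}"
  image=$(localai_image "$profile") || return 1
  gpu_flag=""
  if [ "$profile" = nvidia ]; then gpu_flag="--gpus all "; fi
  printf 'docker run -ti --name local-ai -p 8080:8080 %s%s\n' "$gpu_flag" "$image"
}
```

Printing instead of executing keeps the helper safe to experiment with; once the output looks right, copy it or pipe it to `sh`.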

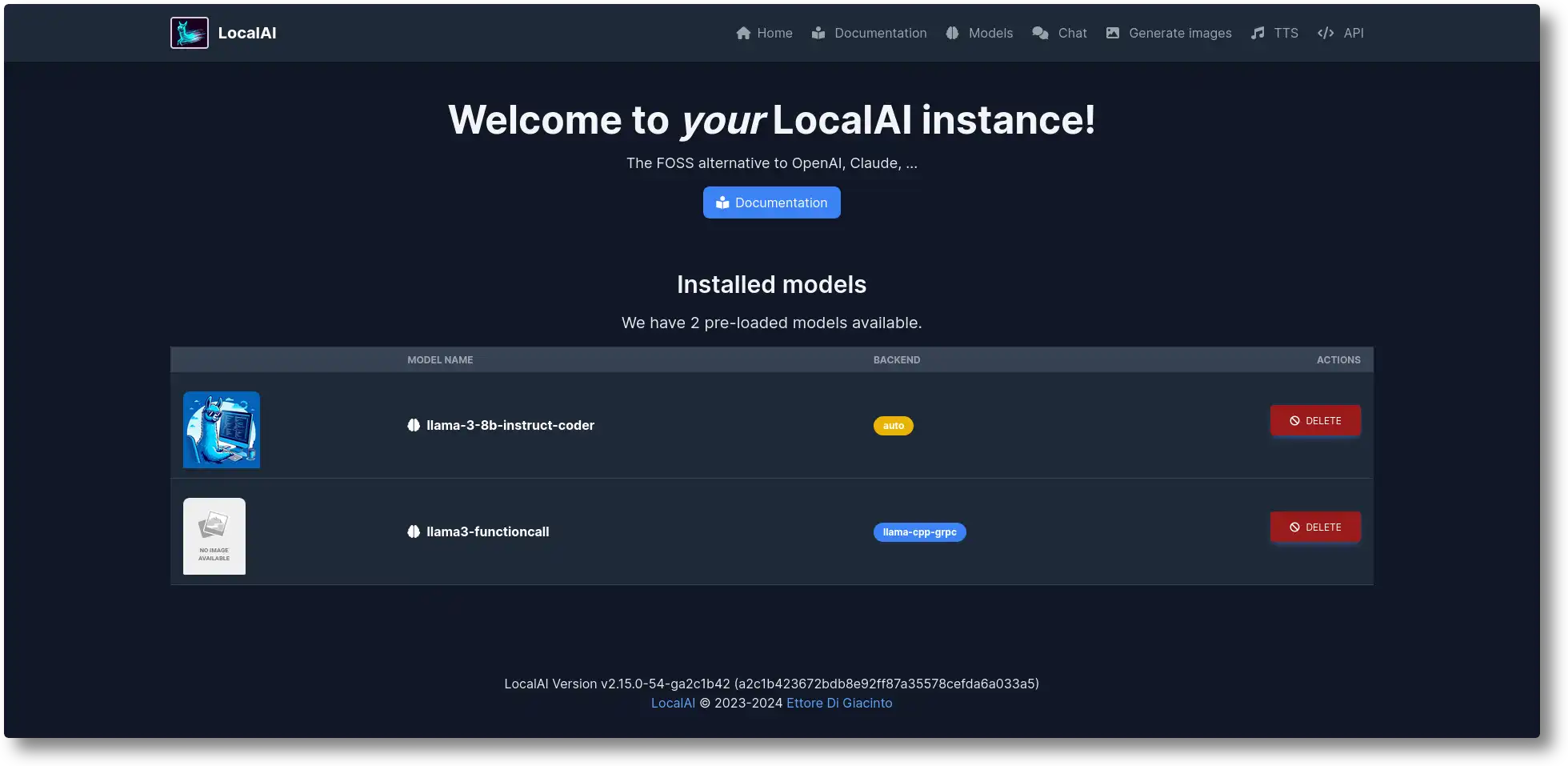

Loading Models 🌟

With the `local-ai` binary available, you can start LocalAI with a specific model loaded using any of the following commands:

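```shell
# From the LocalAI model gallery
local-ai run llama-3.2-1b-instruct:q4_k_m
# A GGUF model file hosted on Hugging Face
local-ai run huggingface://TheBloke/phi-2-GGUF/phi-2.Q8_0.gguf
# From the Ollama registry
local-ai run ollama://gemma:2b
# From a model configuration (YAML) file at a URL
local-ai run https://gist.githubusercontent.com/.../phi-2.yaml
# From a standard OCI registry (for example, Docker Hub)
local-ai run oci://localai/phi-2:latest
```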

- The prefix of each argument (`huggingface://`, `ollama://`, `oci://`, or a plain URL or gallery name) tells `local-ai run` where to fetch the model from, so you can pick whichever model suits your AI task!
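After a model is started, especially on its first download, it can take a while before the server accepts requests. This sketch polls LocalAI's `/readyz` health endpoint and then lists models through the OpenAI-compatible `GET /v1/models`; the `id` values in that response are the names to pass as `model` in API requests. The helper names and `LOCALAI_URL` are hypothetical, and the server is assumed to be on the default `localhost:8080`.

```shell
# Sketch: wait for LocalAI to report ready, then list the available models.
LOCALAI_URL="${LOCALAI_URL:-http://localhost:8080}"

# Poll /readyz every 2 seconds until it returns HTTP 2xx or LIMIT seconds pass.
wait_for_localai() {  # usage: wait_for_localai [LIMIT_SECONDS]
  limit="${1:-300}"
  waited=0
  until curl -fsS "$LOCALAI_URL/readyz" >/dev/null 2>&1; do
    if [ "$waited" -ge "$limit" ]; then
      echo "LocalAI at $LOCALAI_URL not ready after ${limit}s" >&2
      return 1
    fi
    sleep 2
    waited=$((waited + 2))
  done
}

# Print the OpenAI-style model list; each "id" is a name usable as "model".
list_models() {
  curl -fsS "$LOCALAI_URL/v1/models"
}
```

A typical invocation is `wait_for_localai && list_models`, run in a second terminal while `local-ai run` is starting.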

With these simple steps, you can quickly set up your LocalAI development environment and start your own journey of AI exploration—what a fun experience! ✨