How to Install and Use PromptWizard?

Thursday, Jan 2, 2025 | 6 minute read

Unleashing AI Potential with This Innovative Framework! 🚀 An open-source tool that enhances prompt engineering through feedback-driven optimization, making it a game changer for efficiency and performance in Large Language Models! 💡🔥

Explore the Charm of PromptWizard ✨

“In this era of rapidly developing smart technology, how can we enhance our work efficiency and creativity? 🤔”

Artificial Intelligence (AI) has become a core driving force in the modern tech landscape! More and more businesses and developers are looking for effective ways to use Large Language Models (LLMs) to tackle complex problems. Against this backdrop, PromptWizard, an emerging open-source framework, is gaining increasing attention! 🎉 Its feedback-driven approach to prompt engineering and example generation makes it well worth trying! 🌍

PromptWizard: What Makes It So Revolutionary? 🚀

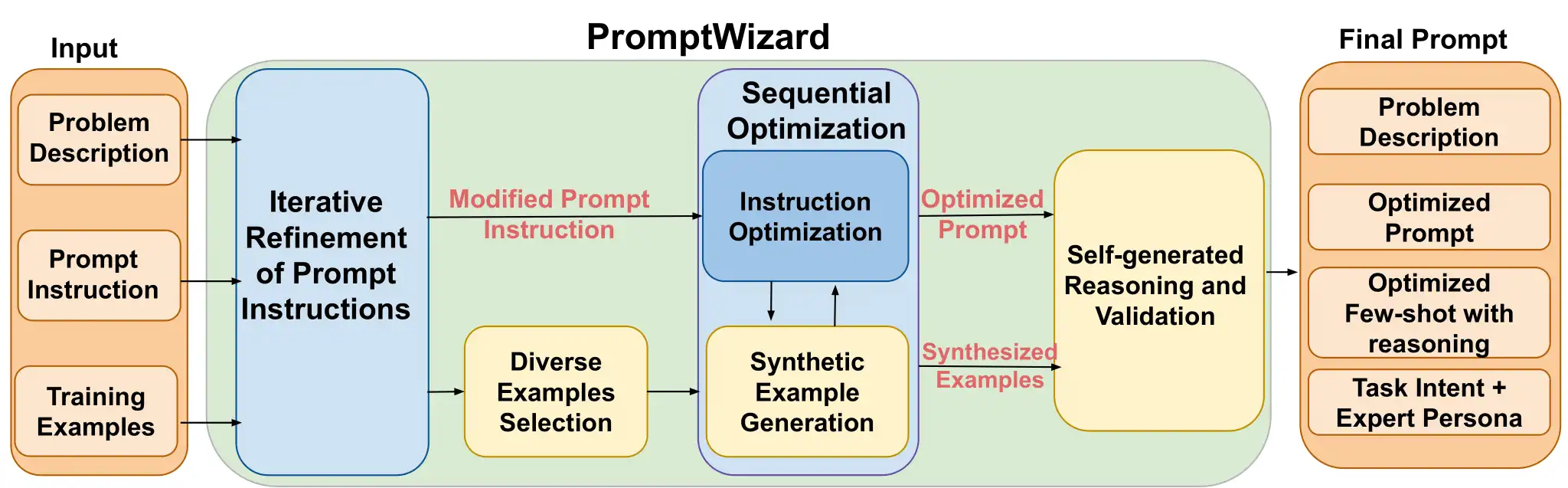

PromptWizard is an exciting open-source framework that automates and optimizes prompt engineering and example generation for Large Language Models (LLMs). 🤖 With its feedback-driven critique and synthesis mechanism, PromptWizard refines prompts iteratively, balancing the exploration of new prompt variants with the reuse of instructions that already work well! This approach is designed to improve task performance while reducing computational costs, paving the way for a variety of applications! 💡

Unique Features of PromptWizard: Disruptive Key Characteristics 🔑

PromptWizard’s feedback-driven iterative optimization lets the LLM generate, evaluate, and improve its own prompts and examples, sharpening the resulting instructions! ✨ Even cooler, it jointly optimizes the prompt and a set of complementary synthetic examples, so that each improves alongside the other and overall performance goes up 📈! Additionally, it integrates Chain-of-Thought (CoT) reasoning, which strengthens the model’s step-by-step problem-solving abilities ⚡️!

Developer’s Choice: Why Choose PromptWizard? 💻

PromptWizard’s performance makes it an excellent choice for developers! 🌟 It not only improves overall task performance while lowering computational costs, but also makes prompt design itself more precise and efficient. 🔍 And when task and model requirements keep changing, PromptWizard provides a sustainable solution, helping users adapt smoothly to the rapidly evolving technological landscape! 🛠️

With these revolutionary features and characteristics, PromptWizard is rethinking the application of Large Language Models, becoming a powerful tool for developers and researchers! 🧠

Steps to Install PromptWizard ✅

Before starting to use PromptWizard, we first need to install it! 🎉

Step 1: Clone the Repository 🚀

To get a local copy of PromptWizard, simply execute the following command in your terminal:

git clone https://github.com/microsoft/PromptWizard

cd PromptWizard

- The git clone command fetches the PromptWizard code from GitHub to create a local copy.

- cd PromptWizard changes your current working directory to the newly cloned folder, allowing you to execute subsequent operations there.

Step 2: Create and Activate a Virtual Environment 🌍

Using a virtual environment is a good practice as it helps you manage Python package dependencies and isolate different projects.

On Windows:

python -m venv venv

venv\Scripts\activate

- The python -m venv venv command creates a new virtual environment called “venv.”

- The venv\Scripts\activate command activates the virtual environment, ensuring that packages installed in this environment do not conflict with global system packages.

On macOS/Linux:

python -m venv venv

source venv/bin/activate

- The same python -m venv venv command creates a venv virtual environment.

- source venv/bin/activate is used to activate this virtual environment, helping you focus on project dependency management.
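To confirm that the activation step worked on either platform, a short Python check can help. This is a minimal sketch and not part of PromptWizard; the helper name `in_virtualenv` is made up for illustration. It relies on the standard-library behavior that `sys.prefix` differs from `sys.base_prefix` when the interpreter runs inside a venv.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("virtual environment active:", in_virtualenv())
print("interpreter prefix:", sys.prefix)
```

If the first line prints False, the environment is probably not activated in the current terminal session.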

Step 3: Install the Package in Editable Mode ⚙️

Next, you will need to install PromptWizard in editable mode, allowing you to modify the code conveniently without reinstalling it!

pip install -e .

- The pip install -e . command installs the PromptWizard package in editable mode, meaning any changes you make to the source code will take effect immediately in your environment.

Quick Start 🏃

Once the installation is complete, you can begin using PromptWizard! ✨

Use Cases:

- Scenario 1: Optimize prompts without examples.

- Scenario 2: Generate synthetic examples to optimize prompts.

- Scenario 3: Optimize prompts using training data.

For more detailed instructions, you can check the provided notebook.

High-Level Overview 🌟

Before diving in, you can follow these steps:

- Choose a Scenario: Select the scenario that suits your use case.

- Configure the Environment: Set up the required configurations and environment variables for API calls. Optimization settings go in a configuration file named promptopt_config.yaml, while API credentials are set as environment variables in a .env file. Here’s an example set of environment variables for the GSM8k dataset:

USE_OPENAI_API_KEY="XXXX"

OPENAI_API_KEY="XXXX"

OPENAI_MODEL_NAME="XXXX"

AZURE_OPENAI_ENDPOINT="XXXXX"

OPENAI_API_VERSION="XXXX"

AZURE_OPENAI_CHAT_DEPLOYMENT_NAME="XXXXX"

- These lines use environment-variable (KEY="value") syntax rather than YAML: you provide your OpenAI or Azure OpenAI credentials and specify the model name and endpoint to use.

- Run the Code: Execute the corresponding code to start optimizing prompts.
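Before running anything, it can be useful to check that the variables from the configuration step are actually filled in. The sketch below is an illustration only: the helper names `parse_env_text` and `missing_keys` are hypothetical, the parser handles just simple KEY="value" lines, and treating all six variables as expected is an assumption (which ones you really need likely depends on whether you use OpenAI or Azure OpenAI).

```python
from typing import Dict, List

# Variable names copied from the example above; whether all of them are
# needed at once is an assumption made for this sketch.
EXPECTED_KEYS = [
    "USE_OPENAI_API_KEY",
    "OPENAI_API_KEY",
    "OPENAI_MODEL_NAME",
    "AZURE_OPENAI_ENDPOINT",
    "OPENAI_API_VERSION",
    "AZURE_OPENAI_CHAT_DEPLOYMENT_NAME",
]


def parse_env_text(text: str) -> Dict[str, str]:
    """Parse simple KEY="value" lines, skipping blanks and # comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Tolerate stray spaces around "=" and strip surrounding quotes.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: Dict[str, str]) -> List[str]:
    """Return the expected keys that are absent or empty."""
    return [key for key in EXPECTED_KEYS if not values.get(key)]


# Placeholder content; in practice you would read the text of your .env file.
sample = '''
USE_OPENAI_API_KEY="XXXX"
OPENAI_API_KEY="XXXX"
OPENAI_MODEL_NAME ="XXXX"
'''
parsed = parse_env_text(sample)
print("missing:", missing_keys(parsed))
```

With the three-line sample, the Azure-related variables are reported as missing, which is the kind of gap you want to catch before a 20-30 minute optimization run.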

Running PromptWizard with Training Data (Scenario 3) 📊

If you have training data, PromptWizard supports several datasets, including GSM8k, AQUARAT, SVAMP, and BBII, which are covered in the sections below.

Please note that optimization times vary, but most optimizations take about 20-30 minutes!

Running on GSM8k (AQUARAT/SVAMP)

To run optimizations on the GSM8k dataset:

- Configure the Azure endpoint in the .env file.

- Follow the instructions in demo.ipynb to download the necessary data, and perform prompt optimization and inference.

Running on BBII

For the BBII dataset, adjust configurations in promptopt_config.yaml. Pay particular attention to task_description, base_instruction, and answer_format. For more details, refer to the demonstration in demo.ipynb.

Running Custom Datasets 🗃️

Creating Custom Datasets

Custom datasets must be in .jsonl format, with each sample including the following fields:

- question: The complete question that you want the LLM to answer.

- answer: The corresponding true answer, which could be a detailed explanation or a concise response.
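To make the format concrete, here is a minimal sketch that serializes samples as JSON Lines and checks that every line carries both fields. The helper names (`to_jsonl`, `validate_jsonl`) and the sample question are made up for illustration and are not part of PromptWizard.

```python
import json
from typing import Dict, Iterable, List

REQUIRED_FIELDS = ("question", "answer")


def to_jsonl(samples: Iterable[Dict[str, str]]) -> str:
    """Serialize samples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(s, ensure_ascii=False) for s in samples) + "\n"


def validate_jsonl(text: str) -> List[str]:
    """Return a list of problems; an empty list means every line is valid."""
    problems: List[str] = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {number}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            problems.append(f"line {number}: expected a JSON object")
            continue
        for field in REQUIRED_FIELDS:
            if field not in record:
                problems.append(f"line {number}: missing field '{field}'")
    return problems


# Made-up sample, only to show the shape of one record.
samples = [{"question": "What is 2 + 3?", "answer": "2 + 3 = 5. The answer is 5."}]
text = to_jsonl(samples)
print(text, end="")
print("problems:", validate_jsonl(text))
```

Writing `text` to train.jsonl and test.jsonl is then a plain file write; validating first avoids discovering a malformed line partway through an optimization run.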

Steps for Custom Datasets

- Dataset Structure: Organize your directory structure to include essential files and folders:

  - configs: Contains hyperparameters for optimization.
  - data: Houses train.jsonl and test.jsonl.
  - .env: Stores environment variables for configuration.
  - A script file for executing the process (.py or .ipynb).

- Setting Hyperparameters: Define optimization hyperparameters in [promptopt_config.yaml](demos/gsm8k/configs/promptopt_config.yaml).

- Dataset Specific Class: Create a class that inherits from DatasetSpecificProcessing to implement necessary answer extraction methods.
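The answer extraction logic depends on your dataset. As a rough illustration, the sketch below pulls a final numeric answer out of free text in the GSM8k style, where reference answers end with a "#### <number>" marker. It is a standalone function, not a subclass of the real DatasetSpecificProcessing class: the function name and the fallback rule are assumptions, and the actual method names you must implement should be taken from the PromptWizard repository and demo.ipynb.

```python
import re
from typing import Optional

# A number with optional sign, thousands separators, and decimal part.
NUMBER = r"-?\d[\d,]*(?:\.\d+)?"


def extract_final_answer(text: str) -> Optional[str]:
    """Return the final numeric answer found in text, or None.

    Hypothetical helper: prefers a GSM8k-style '#### <number>' marker and
    otherwise falls back to the last number that appears in the text.
    """
    marked = re.search(r"####\s*(" + NUMBER + r")", text)
    if marked:
        return marked.group(1).replace(",", "")
    numbers = re.findall(NUMBER, text)
    return numbers[-1].replace(",", "") if numbers else None


print(extract_final_answer("She sells 9 eggs for $2 each. #### 18"))
print(extract_final_answer("The total is 1,234 apples."))
```

In a real dataset-specific class, logic like this would live inside whichever method the base class expects for answer extraction, so that predicted and reference answers are compared in the same normalized form.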

How PromptWizard Works 🔍

PromptWizard starts from a task description and an initial prompt, generates multiple prompt variants, and selects the best variant based on performance metrics. ✨ It then refines the selected prompt through rounds of evaluation and improvement, using the distinction between good and bad examples to guide subsequent optimization.

- The framework generates reasoning chains using the Chain-of-Thought (CoT) approach, enhancing the LLM’s problem-solving capabilities.

- It adjusts prompts by integrating task intent with expert roles to ensure better context understanding.
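The generate-score-select idea described above can be sketched as a toy loop. This is not PromptWizard's implementation: in the real framework an LLM produces and critiques the variants, whereas here `mutate` simply appends fixed style hints and `toy_score` counts keywords. All names and the scoring rule are stand-ins chosen so the example runs without any API calls.

```python
from typing import Callable, List

KEYWORDS = ("step by step", "final answer")


def toy_score(prompt: str) -> int:
    """Stand-in metric: count which target phrases the prompt contains."""
    lowered = prompt.lower()
    return sum(keyword in lowered for keyword in KEYWORDS)


def mutate(prompt: str, styles: List[str]) -> List[str]:
    """Stand-in for LLM-generated variants: append each style hint."""
    return [f"{prompt} {style}" for style in styles]


def optimize(base_prompt: str, styles: List[str],
             score: Callable[[str], int], rounds: int = 2) -> str:
    """Keep the best-scoring variant and mutate it again each round."""
    best, best_score = base_prompt, score(base_prompt)
    for _ in range(rounds):
        for candidate in mutate(best, styles):
            candidate_score = score(candidate)
            if candidate_score > best_score:
                best, best_score = candidate, candidate_score
    return best


styles = ["Think step by step.", "State the final answer clearly.", "Be brief."]
best = optimize("Solve the math problem.", styles, toy_score)
print(best)
```

The useful part to notice is the shape of the loop: each round builds on the current best prompt rather than starting over, which is how iterative refinement accumulates improvements.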

Configuration ⚕️

All hyperparameters related to prompt optimization are configured in the file located at [demos/gsm8k/configs/promptopt_config.yaml](demos/gsm8k/configs/promptopt_config.yaml). Key hyperparameters include:

- mutate_refine_iterations

- mutation_rounds

- refine_task_eg_iterations

- style_variation

- questions_batch_size

- min_correct_count

- max_eval_batches

- top_n

- seen_set_size

- few_shot_count

These parameters will significantly affect the performance of your prompt optimization strategies!
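Because a misspelled key in a configuration file is easy to miss, a small check against the hyperparameter names listed above can be handy. The sketch below works on a plain dictionary (for example, one you obtained by loading the YAML file with a YAML library of your choice). The helper name is hypothetical and the values are placeholders, not recommended settings.

```python
from typing import Dict, List

# Hyperparameter names as listed in this section.
KEY_HYPERPARAMETERS = (
    "mutate_refine_iterations",
    "mutation_rounds",
    "refine_task_eg_iterations",
    "style_variation",
    "questions_batch_size",
    "min_correct_count",
    "max_eval_batches",
    "top_n",
    "seen_set_size",
    "few_shot_count",
)


def missing_hyperparameters(config: Dict[str, object]) -> List[str]:
    """Return the listed hyperparameters that the config does not define."""
    return [key for key in KEY_HYPERPARAMETERS if key not in config]


# Placeholder values only; they are not tuned or recommended settings.
config = {key: 1 for key in KEY_HYPERPARAMETERS}
del config["top_n"]
config["top_k"] = 1  # deliberate typo to show what the check catches

print("missing:", missing_hyperparameters(config))
```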

Best Practices 💡

- Adjust the parameters in promptopt_config.yaml based on experimental results for optimal outcomes.

- Apply user feedback effectively to enhance prompt optimization for specific tasks.

- Experiment with both synthetic and training context examples to see which provides the best performance for your specific use case.

Results 📈

PromptWizard has demonstrated consistent performance across various tasks, achieving high accuracy! Performance overview curves illustrate its advantages over other leading methods, showcasing the immense potential of optimizing LLM use with prompts!✌️